High-Level Project Summary

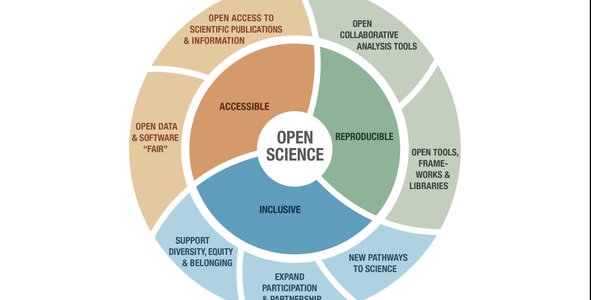

While there is increasing emphasis on “open science,” how it is measured and what it means for open science to be successful are not clearly defined. We posit that accessibility of science and scientific outputs is a fundamental component of open science and propose a method for scientists, funding agencies, and educators to understand the accessibility and impact of research. Our framework, Research Effectiveness and Accessible Science of NASAapps (REASON), supports reflection on how the processes and products of science can be made more inclusive, and it helps educators, funders and research organizations create, use and disseminate accessible research.

Detailed Project Description

Research Effectiveness and Accessible Science of NASAapps (REASON) is a science assessment tool that measures the accessibility and effectiveness of scientific research and related outputs. While there is increasing emphasis on “open science”, how it is measured and what it means for open science to be successful are not clearly defined. We posit that the accessibility of science and scientific outputs is a fundamental component of open science, and we propose REASON as a means for scientists, funding agencies, and educators to understand the accessibility and impact of research. At a basic level, it is a framework for reflecting on how the processes and products of science can be made more inclusive. More pragmatically, it can help educators identify and select science resources from open science projects that meet the needs of learners who have additional requirements for interacting with certain media, and it can show funding agencies and research organizations where their work is and is not accessible to particular users.

Accessible open science supports vulnerable and underserved populations. Broadly, these populations include people with physical and cognitive disabilities, but they can also include people experiencing homelessness or poverty, senior citizens, immigrants, incarcerated or institutionalized people, and other underserved minority groups who need assistive technologies or free, open credentials to access online resources; open access to online health and financial information is of particular concern. Without accessible research outputs, it is nearly impossible for these individuals (students, researchers and the general public alike) to access the resources and services that may be available to them.

The W3C and other organizations provide standardized tools and methodologies for assessing the accessibility of technologies. Chief among them are the W3C Web Content Accessibility Guidelines (WCAG 2.1), whose four principles (perceivable, operable, understandable and robust) aim to ensure that everyone, regardless of context or ability (including cognitive ability, which version 2.1 addresses more fully), is able to benefit from digital materials. Privacy regulation also points to these standards: California’s CPRA regulations cite WCAG version 2.1 specifically for accessible online privacy notices. In the United States, all federal agencies must also comply with Section 508 of the Rehabilitation Act.

REASON is a matrix assessment tool for measuring the accessibility and effectiveness of scientific research and related outputs in the context of open science principles. The minimum viable product is essentially a checklist of accessibility elements plus a scoring matrix for different domains of effectiveness and impact; together they synthesize contemporary concepts from academia, industry, government, and advocacy around accessibility and research effectiveness. Scientific objects (such as research papers, application programming interfaces, data sets, and public-facing educational resource pages) are assessed against each category of accessibility and effectiveness items. The tool does not produce a summative score; instead, it highlights specific areas that warrant attention regarding the equity and accessibility of the object. We hope that scientists, funding agencies, and educators (among others) will apply this assessment tool when creating and disseminating science, as a step toward ensuring inclusivity in science and its outputs.
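One way to picture the checklist-plus-matrix idea is as a small data structure. The sketch below is hypothetical: the class names, the `Status` values and the domain labels are illustrative assumptions rather than REASON's actual item set, and the example entries reuse two findings from the LPI audit reported in the Space Agency Data section alongside invented items. Its one firm design commitment mirrors the text: `flagged_areas()` groups items that are not met or still need review by domain and never adds them up into a single score.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Status(Enum):
    """Hypothetical outcomes for a single checklist item."""
    MET = "met"
    NOT_MET = "not met"
    NOT_APPLICABLE = "not applicable"
    NEEDS_REVIEW = "needs manual review"


@dataclass
class Item:
    domain: str      # hypothetical domain label, e.g. "accessibility"
    criterion: str   # the checklist question being answered
    status: Status


@dataclass
class Assessment:
    object_name: str  # the scientific object under review (paper, API, data set, page)
    items: List[Item] = field(default_factory=list)

    def flagged_areas(self) -> Dict[str, List[str]]:
        """Group criteria that need attention by domain.

        No summative score is computed: items that are not met or still
        need manual review are surfaced as-is for the reader to act on.
        """
        flagged: Dict[str, List[str]] = {}
        for item in self.items:
            if item.status in (Status.NOT_MET, Status.NEEDS_REVIEW):
                flagged.setdefault(item.domain, []).append(item.criterion)
        return flagged


# Example: two findings from the LPI audit plus hypothetical items.
atlas = Assessment(
    "Lunar Orbiter Photographic Atlas pages",
    [
        Item("accessibility", "Images have text alternatives (WCAG 1.1.1)", Status.NOT_MET),
        Item("accessibility", "Link purpose is clear (WCAG 2.4.4)", Status.NOT_MET),
        Item("accessibility", "Video has captions", Status.NOT_APPLICABLE),
        Item("effectiveness", "Resource is reused in teaching materials", Status.NEEDS_REVIEW),
    ],
)
print(atlas.flagged_areas())
```

Keeping `NOT_APPLICABLE` distinct from `NOT_MET` matters for this design: a data set with no video should not be flagged for missing captions.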

We assessed pages from NASA’s Lunar and Planetary Institute (https://www.lpi.usra.edu/resources/lunar_orbiter/bin/lst_nam.shtml) as an applied example of using this tool (see the Space Agency Data section for a more detailed description of the methodology and findings). Briefly, by applying REASON to these pages, we were able to identify potential areas for improvement in making these resources more accessible.

Open questions for the framework include:

- Weights for different criteria

- Qualitative vs. quantitative measures

Drawing on our experiences in education and as educators, one concrete accessibility problem we identified is the lack of an efficient way for educators to ensure that science teaching materials incorporating resources from open science projects can be used by learners of all abilities. Currently, educators may spend several hours checking for accessibility issues and adapting these materials to fit learners’ needs. In the future, we imagine that an application based on artificial intelligence could streamline the accessibility assessment of diverse resources and potentially remedy certain issues (such as generating alt text for images and figures, or reformatting text and unstructured formats so that assistive software can read them more easily).

Other future directions are to continue refining and testing the assessment items using an iterative process involving multiple stakeholders. The goal is to have a tool that not only helps identify potential issues but also helps scientists and others improve accessibility in open science.

Space Agency Data

Our team used resources from the Digital Lunar Orbiter Photographic Atlas of the Moon from the NASA Lunar and Planetary Institute (LPI) (https://www.lpi.usra.edu/resources/lunar_orbiter/bin/lst_nam.shtml). These pages were evaluated for accessibility using automated accessibility testing tools and manual testing.

Our audit identified several accessibility issues, notably missing alternative (ALT) text and empty links. Images in the Lunar Orbiter Photographic Atlas lacked alternative text, making them unavailable to users who rely on assistive technology such as screen readers. Images used as links were missing labels that tell users where the links lead. These results support our position that accessibility should be addressed as a metric for research.
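The two issues reported above can be spotted with a simple static check. The sketch below is a minimal illustration using only Python's standard-library `html.parser`; it is not how WAVE or the other tools listed under References work, the class name is hypothetical, and the sample markup and file names are invented rather than taken from the LPI pages. Because it only inspects markup, it cannot judge whether existing alt text is meaningful; that still requires manual testing.

```python
from html.parser import HTMLParser
from typing import List, Optional


class AltTextAndLinkAudit(HTMLParser):
    """Flag <img> elements with no alt attribute (WCAG 1.1.1) and <a>
    elements with no accessible name (WCAG 2.4.4).

    A link counts as named if it contains visible text, has a non-empty
    aria-label, or wraps an image with non-empty alt text. Nested links
    are not handled.
    """

    def __init__(self) -> None:
        super().__init__()
        self.images_missing_alt: List[str] = []  # src of each offending <img>
        self.empty_links: List[str] = []         # href of each unnamed <a>
        self._open_href: Optional[str] = None    # href of the <a> being parsed
        self._link_named = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a":
            self._open_href = attributes.get("href") or ""
            self._link_named = bool((attributes.get("aria-label") or "").strip())
        elif tag == "img":
            # alt="" is allowed for decorative images, so only a missing
            # attribute is flagged here.
            if "alt" not in attributes:
                self.images_missing_alt.append(attributes.get("src") or "")
            alt = attributes.get("alt") or ""
            if self._open_href is not None and alt.strip():
                self._link_named = True  # a linked image's alt text names the link

    def handle_data(self, data):
        if self._open_href is not None and data.strip():
            self._link_named = True

    def handle_endtag(self, tag):
        if tag == "a" and self._open_href is not None:
            if not self._link_named:
                self.empty_links.append(self._open_href)
            self._open_href = None


# Hypothetical markup resembling an image index page.
sample = """
<a href="plate_I-136-M.html"><img src="thumb_I-136-M.jpg"></a>
<a href="about.html">About the atlas</a>
<img src="spacer.gif" alt="">
"""
audit = AltTextAndLinkAudit()
audit.feed(sample)
audit.close()
print("Images missing alt:", audit.images_missing_alt)
print("Empty links:", audit.empty_links)
```

A linked image with no alt attribute shows up in both lists, which matches the pattern the audit found: the image itself is unlabeled, and so the link it forms has no name either.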

Examples (screenshots at https://1drv.ms/w/s!Amw09CA5GCgjiX-ubOZ2HVOvFNxg):

- Low contrast text (landing page, button): WCAG 2.1 Success Criterion 1.4.3 Contrast (Minimum) (Level AA)

- Missing alternative text for images (image page): WCAG 2.1 Success Criterion 1.1.1 Non-text Content (Level A)

- Empty links (images): WCAG 2.1 Success Criterion 2.4.4 Link Purpose (In Context) (Level A)

- Heading structure (landing page): WCAG 2.1 Success Criterion 2.4.6 Headings and Labels (Level AA)
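The contrast finding can be checked numerically. WCAG 2.1 defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and darker colors, and Success Criterion 1.4.3 requires at least 4.5:1 for normal text and 3:1 for large text (at least 18 point, or 14 point bold). The sketch below implements that formula in Python. The function names are our own, and the example colors are illustrative, since the audit did not record the button's actual colors.

```python
def _linear_channel(value_8bit: int) -> float:
    """Convert one sRGB channel (0-255) to linear light, as in the
    WCAG 2.1 definition of relative luminance."""
    c = value_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as "#RRGGBB"."""
    digits = hex_color.lstrip("#")
    r, g, b = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear_channel(r) + 0.7152 * _linear_channel(g) + 0.0722 * _linear_channel(b)


def contrast_ratio(color_a: str, color_b: str) -> float:
    """(L1 + 0.05) / (L2 + 0.05), with L1 the lighter of the two colors."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_sc_1_4_3(text_color: str, background: str, large_text: bool = False) -> bool:
    """Level AA check: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(text_color, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#777777", "#FFFFFF"), 2))
print(meets_sc_1_4_3("#777777", "#FFFFFF"))
print(meets_sc_1_4_3("#767676", "#FFFFFF"))
```

Mid-gray #777777 on white lands just under 4.5:1 and fails for normal text, while the slightly darker #767676 passes. Near-misses like this are easy to miss by eye, which is why a contrast analyzer belongs in the audit toolkit.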

Hackathon Journey

We were each drawn to exploring the accessibility and effectiveness of open science for different reasons (personally narrated below), but we worked together as a team to identify our interests and how they could fit together. We found common ground in the field of education and sought solutions that could be applied in that context. We set timelines for milestones and alternated between team meetings and independent work to balance progress in the hackathon with other commitments. We asked each other probing questions to push our ideas further and built on them with a “yes, and” approach. When challenges arose, we anchored ourselves in the problem statement and in the end users we imagine using this tool. Overall, we had a wonderful time! We’d like to thank the Space Apps event organizers and our local lead for all their hard work and dedication to “make space” for space lovers around the world!

(Kevin) Space Apps was an opportunity for me—as someone who loves space but does not work professionally in the field—to reconnect with this passion and meet others who share it. There were many interesting challenges, but ultimately, I gravitated towards measuring open science since it was a broadly applicable topic and one which could reach many disciplines. It was great listening to, learning from, and working with the team on accessibility of science. I personally learned a lot about the different public-facing resources at NASA and about tools and methods used to assess accessibility and the effectiveness of science at large.

(Janet) Growing up in the days of Apollo 11 and hearing Neil Armstrong utter the memorable phrase “One small step for man. One giant leap for mankind.” was a factor in my choosing the Lunar Orbiter database to test accessibility. As someone who has worked in education from K-12 to higher education, I want to share that excitement with students of all abilities. Personally, I am grateful for the opportunity to work with the talented individuals on my team and for the experience gained from our collaboration through the Space Apps Challenge.

(Noreen) Having served as a mentor for Space Apps in the past, this year I was inspired to create a team to address the Measuring Open Science challenge. My research interests include technology standards for identity and accessibility. I saw a way to introduce a scoring framework based on accessibility measures to complement traditional metrics and more established alternative metrics. I originally looked at documents in the NASA Technical Reports Server (NTRS) to see what the typical formats are, how accessible those formats generally are, and how easily a format could be transformed into one that assistive technology and machine readers can parse. A quick review of NTRS revealed primarily PDF documents, many of which are scanned copies of older documents, such as technical reports from the 1960s, that are not accessible. I enjoyed the team discussions, and while I thought we would end up with a simple app prototype of accessibility guidelines, the framework we came up with may be much more impactful in the long run.

(Brett) The Space Apps experience was an opportunity to learn about accessibility issues and think deeply about the benefits (and potential costs and risks) of scientific research. We were further motivated by the potential scalability of our proposed solution and the opportunity to contribute to human progress.

References

For the prototype:

1. "How do we measure the impact of research?" RTÉ Brainstorm. https://www.rte.ie/brainstorm/2018/0417/955151-how-do-we-measure-the-impact-of-research/

2. "Measuring research impact." Victoria University. https://www.vu.edu.au/researchers/research-lifecycle/publish-disseminate-your-research/measuring-research-impact

For the accessibility test:

LPI Resources Digital Lunar Orbiter Photographic Atlas of the Moon

Image: I-136-M

The evaluation covered the landing page and a linked image page and measured them against the Web Content Accessibility Guidelines (WCAG) 2.1, Levels A and AA. The audit was completed in Chrome version 105.0.5195.127 (Official Build) (64-bit), desktop only.

Tools used include:

- NonVisual Desktop Access (NVDA) version 2022.2.4 https://www.nvaccess.org/download/

- WAVE Evaluation Tool version 3.1.6 https://wave.webaim.org/extension/

- Chrome Web Developer Toolbar version 0.5.4 https://developer.chrome.com/docs/devtools/

- TPGi Color Contrast Analyzer https://www.tpgi.com/color-contrast-checker/

- WebAIM Million Page Report https://webaim.org/projects/million/

- Web Content Accessibility Guidelines (WCAG) 2.1 standards, levels A, AA. https://www.w3.org/TR/WCAG21/

- Manual Test

Background Research and Literature Review:

"Web Accessibility for Designers." WebAIM. https://webaim.org/resources/designers/

"Tips for Accessibility-Aware Research." W3C. https://www.w3.org/WAI/RD/wiki/Tips_for_Accessibility-Aware_Research

"Disability and Health Promotion." US Centers for Disease Control and Prevention. https://www.cdc.gov/ncbddd/disabilityandhealth/infographic-disability-impacts-all.html

"U.S. Research and Development Funding and Performance: Fact Sheet." Congressional Research Service. https://sgp.fas.org/crs/misc/R44307.pdf

"REF Impact" (UKRI definition). UK Research and Innovation. https://www.ukri.org/about-us/research-england/research-excellence/ref-impact/

"What's the Difference Between WCAG Level A, Level AA, and Level AAA?" Bureau of Internet Accessibility. https://www.boia.org/blog/whats-the-difference-between-wcag-level-a-level-aa-and-level-aaa

Tags

#accessibility #openscience #reproducibility #researchmetrics #altmetrics